What gets counted gets managed, right?

What happens when the output becomes the focus rather than the variables that make up the output? Often, where one measure is held up as the panacea for all things, the people doing the measuring and those affected by the output start gaming the process to suit their individual concerns.

Take, for example, the ratio of teachers to children within a school system. This is the metric that is often quoted and targeted; however, read here for the opposing factors, such as what happens when class sizes are too small, or the cost of implementing such a reduction (source).

This gaming of the system creates false data, and when people rely on this data to allocate resources we find a mismatch between what is needed and what is allocated. I know why we do it: we want to hit targets, and we don’t want that stat. But is warping the definition of a recordable injury a true reflection of operating conditions? Is making the numbers fit going to get you the resources and energy you need long term?

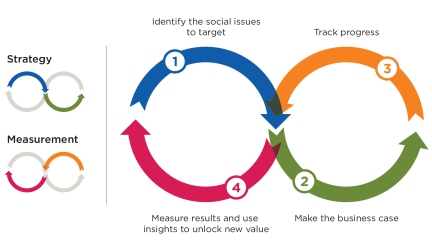

I read about an interesting action that can be taken to avoid the above. For each critical measure of performance, an equal and opposite measure should be used to balance the by-product of tracking that measure.

For instance:

- Recordable (non-impact) incident rates vs recordable injury rates

- Number of high-risk task observations vs number of high-risk tasks being undertaken

- Number of safety activities being undertaken (inspections etc.) vs average number of findings per inspection

- High level investigations completed vs sustainable engineering or above controls adopted from safety in design

- Safety advisor span of control vs supervisor span of control

- Actions from external audits vs internal revisions of process

Although binary in nature, this approach makes the end user consider a more balanced and holistic view before relying on only one metric, reflecting the multidisciplinary and multifactorial nature of generating the outcome of safety.
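As a rough sketch of how the pairing could look on a dashboard, the Python below reports each measure next to its counter-measure, along with a simple ratio between the two. The `MetricPair` class, the `report` function and the figures in the usage note are all hypothetical and purely illustrative; they are not taken from any real site or reporting tool.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MetricPair:
    """A primary measure and the counter-measure reported beside it (hypothetical structure)."""
    primary_name: str
    primary_value: float
    counter_name: str
    counter_value: float

    def ratio(self) -> Optional[float]:
        """Primary value per unit of the counter-measure; None if the counter is zero."""
        if self.counter_value == 0:
            return None
        return self.primary_value / self.counter_value


def report(pairs: List[MetricPair]) -> List[str]:
    """Build one line per pair so neither number is ever shown on its own."""
    lines = []
    for pair in pairs:
        r = pair.ratio()
        ratio_text = "n/a" if r is None else f"{r:.2f}"
        lines.append(
            f"{pair.primary_name}: {pair.primary_value:g} | "
            f"{pair.counter_name}: {pair.counter_value:g} | "
            f"ratio: {ratio_text}"
        )
    return lines
```

For example, with made-up numbers, `report([MetricPair("High-risk task observations", 12, "High-risk tasks undertaken", 48)])` yields a single line showing both counts and a ratio of 0.25. The ratio is only a prompt for a conversation; what counts as a healthy balance for any pair is a judgment for the people who know the operation.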